**More updates coming soon...**

I am Nikhil Verma, a Ph.D. student at Carnegie Mellon University. I graduated from the National Institute of Technology majoring in Biomedical Engineering.

My current research interests include Neural Engineering, Neuroprosthetics, Neuromodulation, Brain-Computer Interfaces, and Artificial Intelligence. Here at CMU, I am currently exploring the use of spinal cord stimulation to improve motor control after paralysis due to stroke and spinal cord injury.

During my undergraduate degree, my interests ranged widely. I have worked on all three aspects of HCI: Sensing, Machine Learning, and Sensory Augmentation. I have also worked on Plant-Computer Interfaces, Plant-Human Interfaces, Computer Vision, and Deep Learning.

I have been especially enthralled by recent developments in the fields of Brain-Computer Interfaces, Neural Engineering, and Artificial Intelligence. In my fantasy world, I see these fields combine, working in symbiosis with each other. I believe this would mark a new step in human evolution, augmenting our physical and cognitive abilities.

Date:

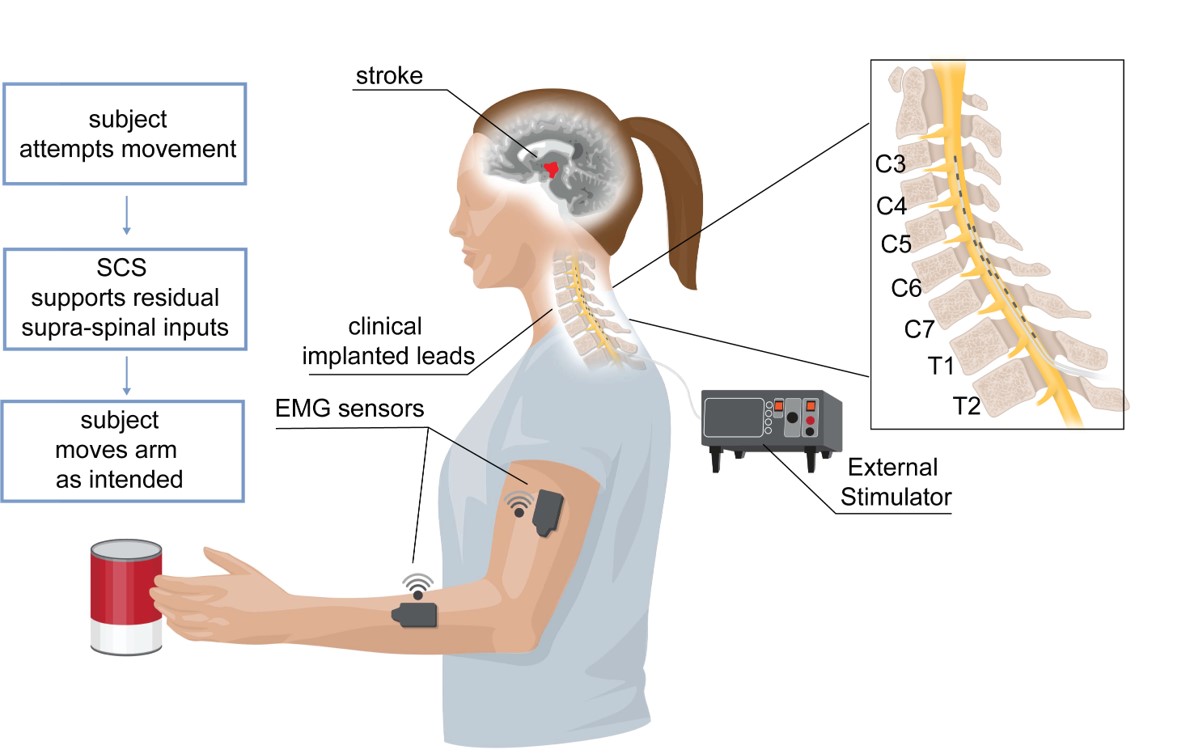

Epidural Spinal Cord Stimulation to Restore Movement after Stroke

Fig: Illustration of Epidural SCS system for movement restoration after stroke

Cerebral strokes can disrupt descending commands from motor cortical areas to the spinal cord, which can result in permanent motor deficits of the arm and hand. However, below the lesion, the spinal circuits that control movement remain intact and could be targeted by neurotechnologies to restore movement. Here we report results from two participants in a first-in-human study using electrical stimulation of cervical spinal circuits to facilitate arm and hand motor control in chronic post-stroke hemiparesis (NCT04512690). Participants were implanted for 29 d with two linear leads in the dorsolateral epidural space targeting spinal roots C3 to T1 to increase excitation of arm and hand motoneurons. We found that continuous stimulation through selected contacts improved strength (for example, grip force +40% SCS01; +108% SCS02), kinematics (for example, +30% to +40% speed) and functional movements, thereby enabling participants to perform movements that they could not perform without spinal cord stimulation. Both participants retained some of these improvements even without stimulation and no serious adverse events were reported. While we cannot conclusively evaluate safety and efficacy from two participants, our data provide promising, albeit preliminary,

Date: October 2023 - Present

Myoelectric interfaces for inclusive human-computer interactions

A little something about the project

More info coming soon...

Date:

Portable Intracortical Brain-Computer Interface System

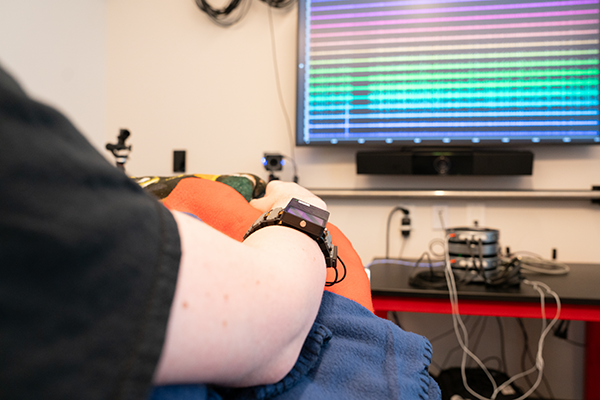

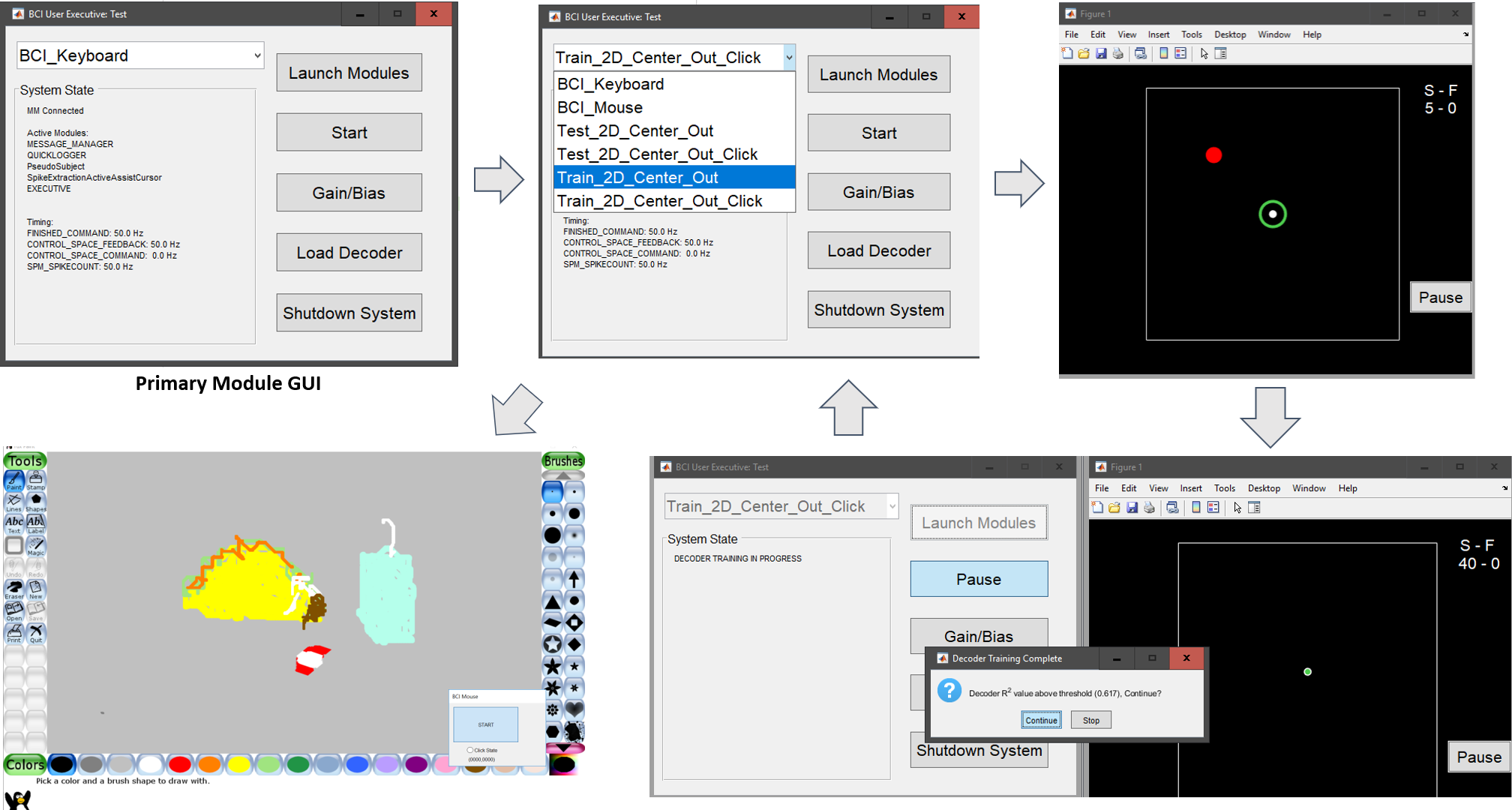

Fig: Participant using Portable iBCI system

Intracortical brain-computer interface (iBCI) research has demonstrated the ability of people with paralysis to control computers. However, most of these demonstrations have been limited to lab settings, and there has been a gap in bringing the technology to the end user. The majority of these studies have evaluated the system based on its usability. However, usability covers only part of the user experience, namely its pragmatic qualities, and not its hedonic qualities. To design a system that is user centered, there needs to be an iterative design process between the developer and the user that addresses the whole user experience.

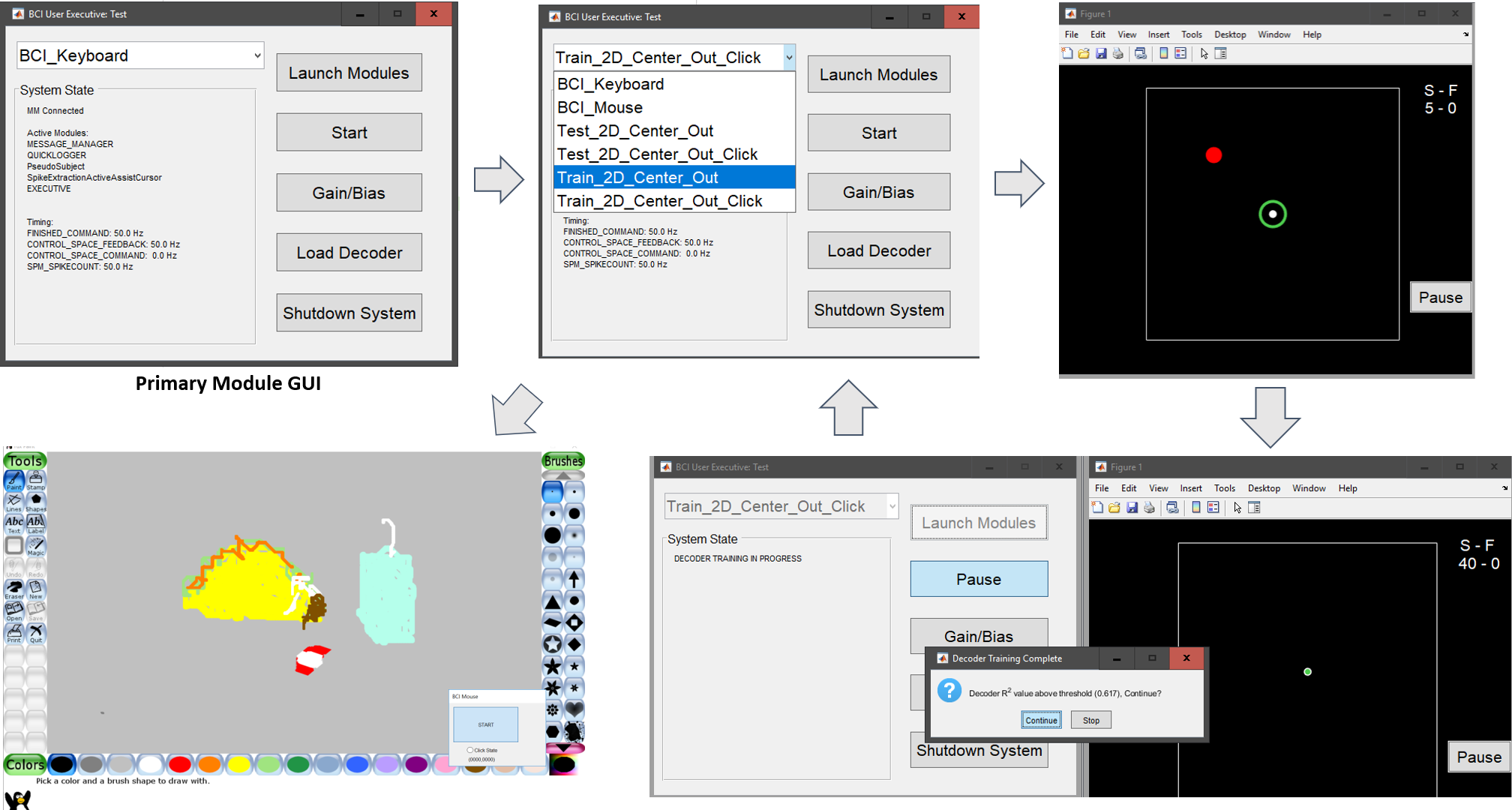

I worked on the graphical user interface and on the interface between the different software modules for controlling a tablet PC through a brain-computer interface. The goal was to transition from an experimental system, where the developer sets up the task for the participant, to a system available to an end user, where the participant can independently set up (maybe with the help of the user) and use the system at home. Once the user was able to use the system, it was evaluated with respect to the whole user experience. The iBCI user experience was then compared to that of touch screen input by a participant with tetraplegia who retained the use of his proximal arm but had impaired hand function.

Fig: Graphical User Interface of the system and painting made by the participant using BCI control

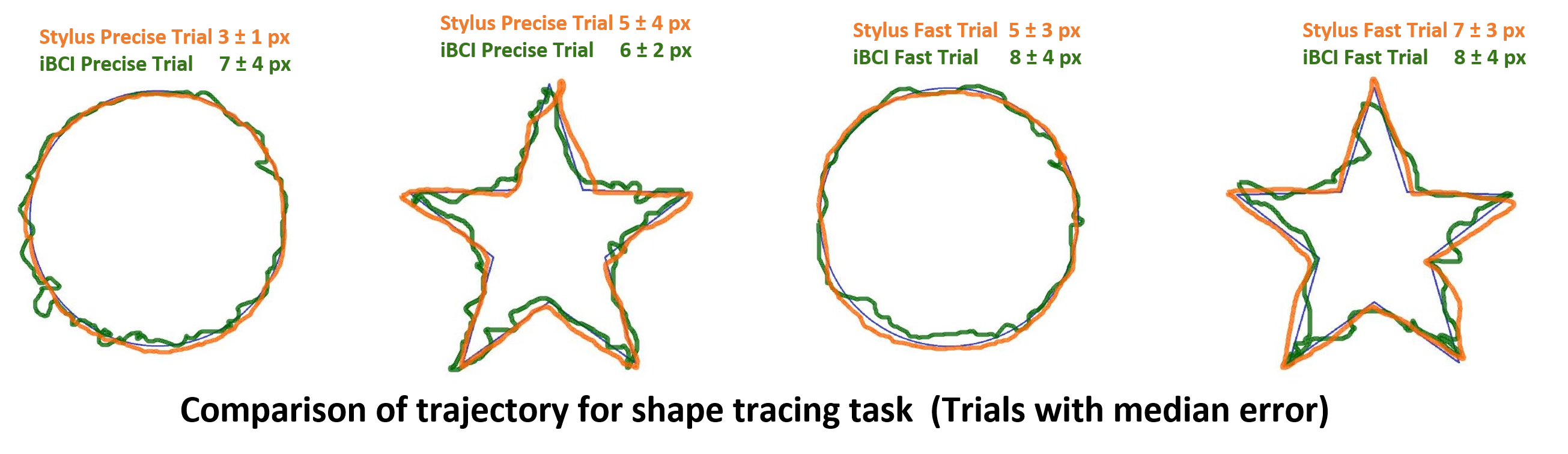

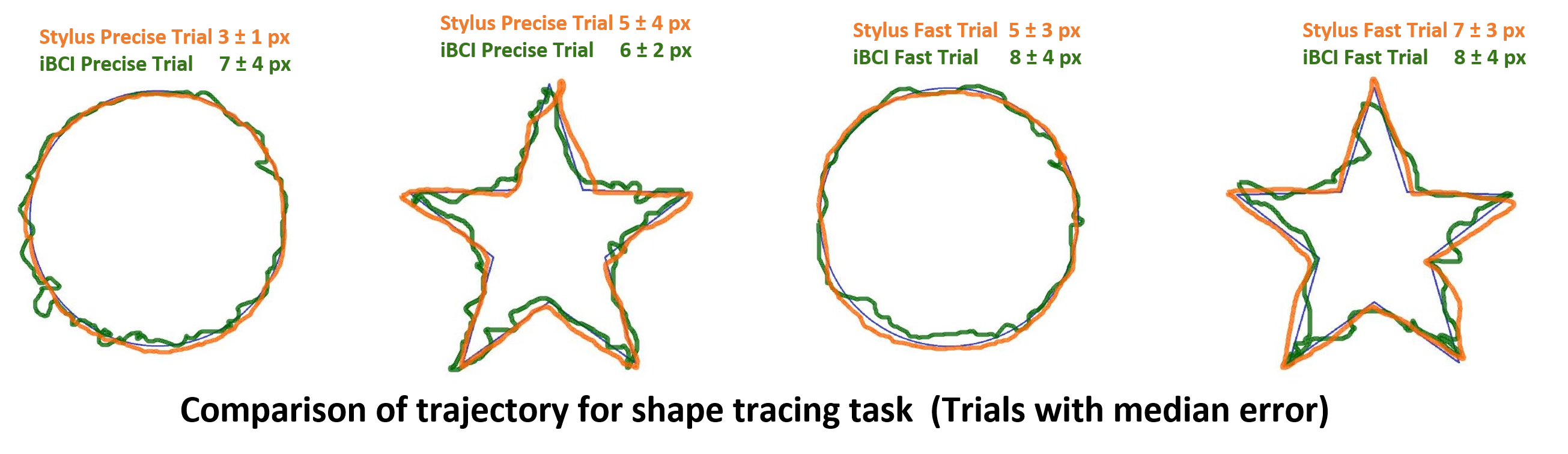

The participant was able to use a 3-DOF decoder to complete the center-out task and the shape tracing task. The user experience assessment revealed high effectiveness (high accuracy, low error) and an overall good match between the user and the assistive device, which suggests that the user was rather satisfied with the system when assessed using various questionnaires.

Fig: Shape tracing accuracy result comparison of BCI control and Stylus control.

Date: December 2018

Personal Healthcare Companion.

{Computer Vision, Deep Learning}

Tadashi was chosen as one of the Top 20 healthcare solutions in the GE Healthcare Precision Challenge 2018.

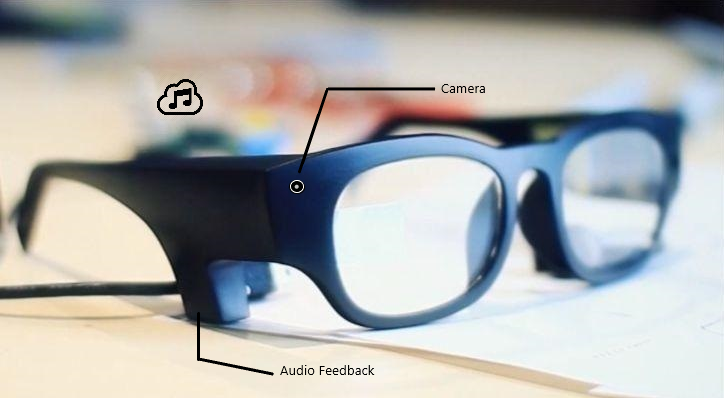

Tadashi is a Personal Healthcare Companion that identifies and monitors the user's food intake and gives feedback on their eating habits. This includes immediate and weekly feedback, which helps with decision making and self-realization and gives the user additional motivation to continue with a healthy lifestyle. This is done through immediate audio feedback, automatic tracking of food intake, and a gamification approach that maintains a leaderboard affected by healthy and unhealthy food habits.

In this way, Tadashi helps the user make immediate decisions to avoid an unhealthy diet, helps the user track their food habits, and motivates the user to maintain a streak of sticking to a healthy diet.

Fig: Smart glass as the chosen form factor with camera and bone conduction audio feedback

Fig: Tadashi detecting pizza

Date: August 2018 - December 2018

Restoring Tactile Sensation In Upper Limb Amputees.

{Neural Interface, Peripheral Nerve Stimulation}

Artificial limbs can play an essential role in the rehabilitation of amputees. Existing state-of-the-art prostheses help amputees regain lost function but cannot convey tactile sensation to the brain. Tactile sensing provides valuable insight into the environment with which we interact. For upper limb prosthesis users, the absence of sensory feedback impedes efficient use of the prosthesis. In our work, we studied the use of noninvasive, targeted Transcutaneous Electrical Nerve Stimulation (TENS) to provide sensory feedback to upper limb amputees. We were able to classify sensation in different surface areas of the palm where effective nerve stimulation was possible. We also developed a closed-loop system that automatically delivers electrical stimulation to the nerves whenever the prosthetic limb records a touch. We found that all subjects were able to differentiate between the different areas of the palm when different peripheral nerves were stimulated. We also explored different stimulation parameters and found that a higher stimulation frequency provided a more natural sensation; we tested frequencies of up to 500 Hz, with the amplitude modulated at a lower frequency of at most 10 Hz due to hardware constraints. This system aims to help improve prosthetic technology so that one day amputees will feel as if their device is a natural extension of their body.
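To make the stimulation parameters concrete, here is a minimal sketch of an amplitude-modulated pulse train with a 500 Hz carrier and a 10 Hz envelope. The biphasic square pulse shape, the raised-cosine envelope, the sample rate, and the function name `am_stim_waveform` are all assumptions for illustration; they are not the waveform or hardware settings used in the study.

```python
import math


def am_stim_waveform(duration_s, fs_hz=10_000, carrier_hz=500.0,
                     mod_hz=10.0, peak_amp=1.0):
    """Return samples of a biphasic square carrier shaped by a slow envelope.

    All defaults are illustrative assumptions, not study parameters.
    """
    n = round(duration_s * fs_hz)
    samples = []
    for i in range(n):
        t = i / fs_hz
        # Raised-cosine envelope: swings between 0 and peak_amp at mod_hz.
        envelope = 0.5 * peak_amp * (1.0 - math.cos(2.0 * math.pi * mod_hz * t))
        # Square carrier: positive for the first half of each carrier period,
        # negative for the second half (charge-balanced on average).
        phase = (t * carrier_hz) % 1.0
        carrier = 1.0 if phase < 0.5 else -1.0
        samples.append(envelope * carrier)
    return samples


# 0.2 s of stimulation, i.e. two full 10 Hz envelope cycles.
wave = am_stim_waveform(0.2)
```

The envelope is zero at the start of each 10 Hz cycle and reaches its peak halfway through, so the perceived intensity rises and falls ten times per second while the carrier stays at 500 Hz.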

Fig: Testing on subject-6

Fig: Electrode Placement (Median and Ulnar Nerve)

Date: October 2018 - December 2018

Plant-Human Interface

{ Plant-Sensors, RaspberryPi, Peripheral Nerve Stimulation }

Botanical Touch was developed to explore transhumanism and expand our horizons beyond the five senses. The system allows us to perceive a different sense of touch, that of a plant, helping us develop a deeper connection to plants and, in turn, to nature.

The plant acts as a natural sensor that generates an impulse on being touched. This impulse is amplified and transmitted to the microcontroller, which drives the TENS machine, enabling the user to feel a tactile sensation through peripheral nerve stimulation when the plant is touched. I believe this would help bring us closer to nature and thus work towards protecting it.
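The trigger logic can be sketched as a simple threshold detector on the amplified plant signal. This is a minimal illustration only: the threshold value, the refractory period, and the function name `detect_touch_events` are hypothetical and not taken from the project.

```python
def detect_touch_events(samples, threshold, refractory=5):
    """Return sample indices where the signal rises above `threshold`.

    A new event is only accepted if more than `refractory` samples have
    passed since the previous one, so a single touch does not fire the
    stimulator repeatedly. Both parameters are illustrative assumptions.
    """
    events = []
    last_event = -refractory - 1
    above = False
    for i, value in enumerate(samples):
        rising_edge = value >= threshold and not above
        if rising_edge and i - last_event > refractory:
            events.append(i)
            last_event = i
        above = value >= threshold
    return events


# Synthetic amplified signal: a touch at index 2, a bounce at index 5
# (inside the refractory period), and a second touch at index 11.
signal = [0.0, 0.1, 0.9, 1.0, 0.2, 0.8, 0.1, 0.0, 0.0, 0.0, 0.0, 0.95, 0.1]
events = detect_touch_events(signal, threshold=0.7, refractory=5)
```

Each detected index would be the point at which the microcontroller switches the TENS output on for a short burst.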

Date: May 2018 - July 2018

(MIT Media Lab)

Interfacing with Dreams to Augment Human Creativity

{ Signal Processing, Machine Learning , Python }

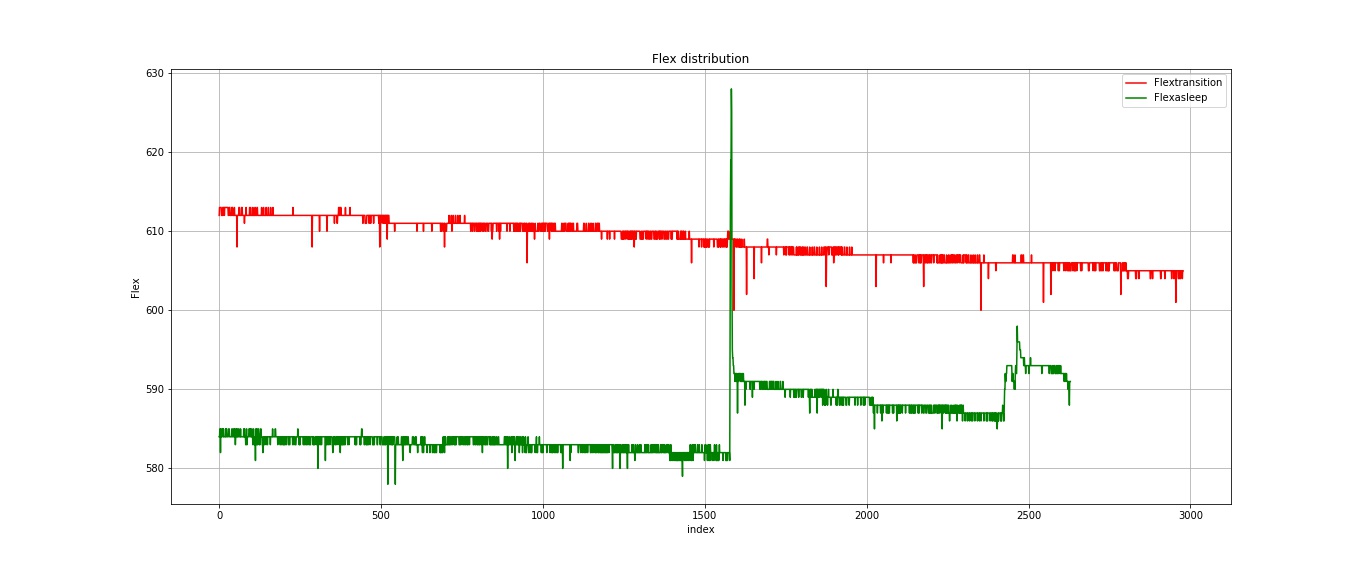

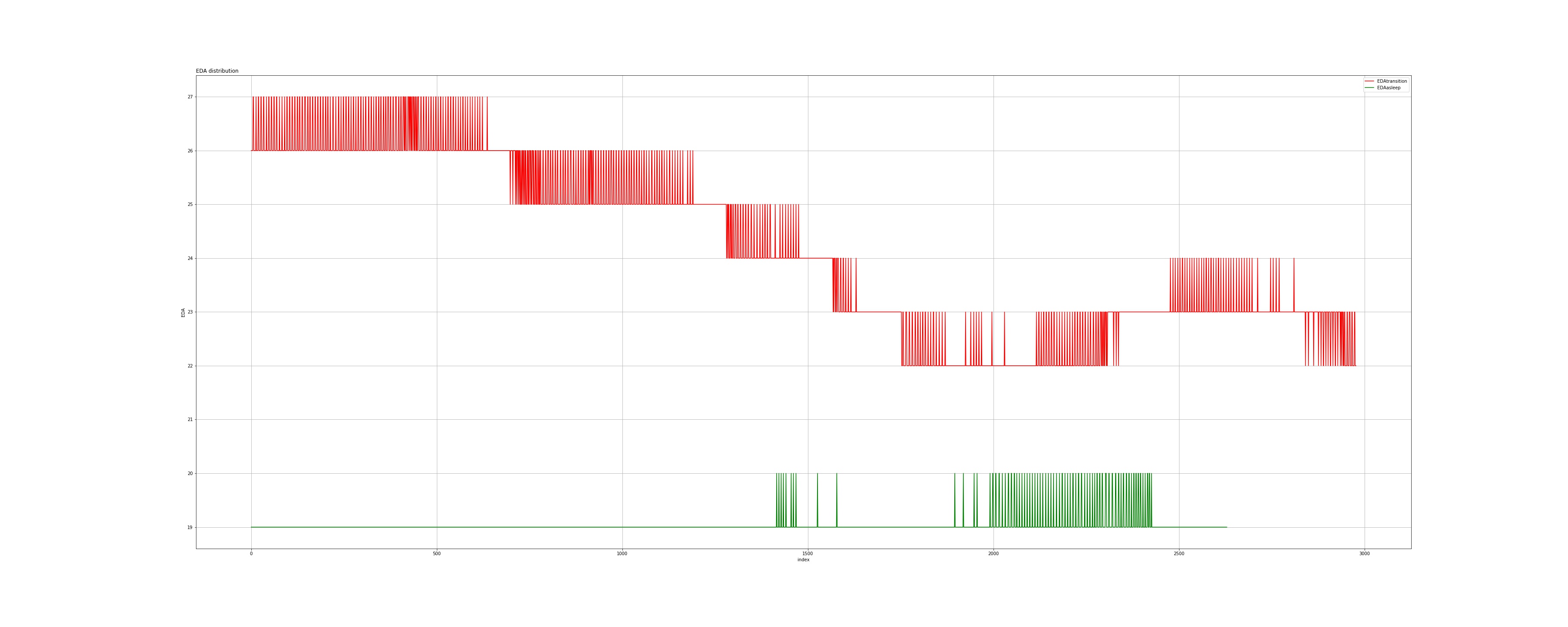

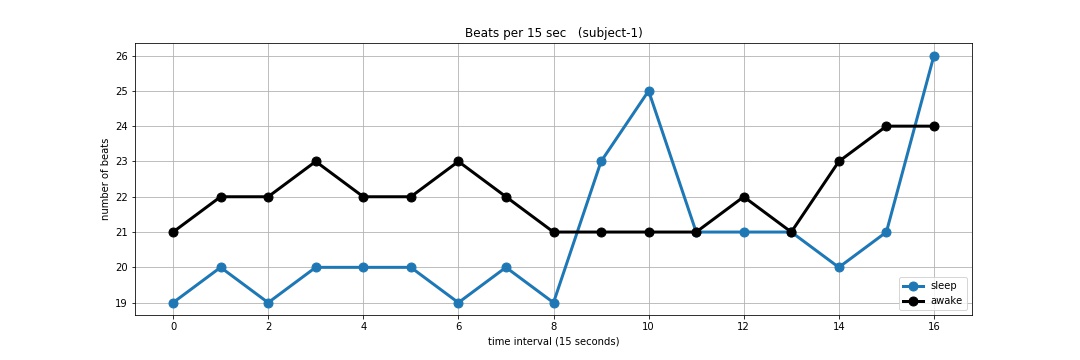

This system allows access to the semi-lucid sleep state of Hypnagogia and can successfully influence, extract information from, and leverage cognition happening during the early stages of sleep in order to augment human creativity. Famous personalities like Edison and Tesla used a steel ball while napping to access this sleep state. Dormio uses sensors that detect Heart Rate Variability, Electrodermal Activity, and Muscle Tone to identify this stage and sends these signals in real time to a computer.

This creates an opportunity to automate the process of influencing and extending the early-stage dreaming state called Hypnagogia. My work was to detect this sleep state. I used Machine Learning and Signal Processing to distinguish Hypnagogic sleep from Non-Hypnagogic sleep.
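As an illustration of the kind of signal-processing feature that could feed such a classifier, the sketch below computes RMSSD, a common heart rate variability measure, over sliding windows of inter-beat intervals. The choice of RMSSD, the window length, and the function names are assumptions for illustration; the features actually used in the project are not listed here.

```python
import math


def rmssd(ibi_ms):
    """Root mean square of successive differences between inter-beat intervals."""
    diffs = [b - a for a, b in zip(ibi_ms, ibi_ms[1:])]
    if not diffs:
        raise ValueError("need at least two inter-beat intervals")
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


def windowed_rmssd(ibi_ms, window=4):
    """RMSSD over each sliding window of `window` consecutive intervals."""
    return [rmssd(ibi_ms[i:i + window])
            for i in range(len(ibi_ms) - window + 1)]


# Synthetic inter-beat intervals in milliseconds (not recorded data).
ibis = [800, 810, 790, 800, 805, 795]
features = windowed_rmssd(ibis, window=4)
```

Each window yields one feature value; combined with similar features from the EDA and muscle tone signals, these rows could be labelled and passed to a supervised classifier.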

Fig: Flex Sensor Signal of Awake and Asleep Subject

Fig: EDA Signal of Awake and Asleep Subject

Fig: BPM plot of Awake and Asleep Subject

Date: August 2017 - October 2017

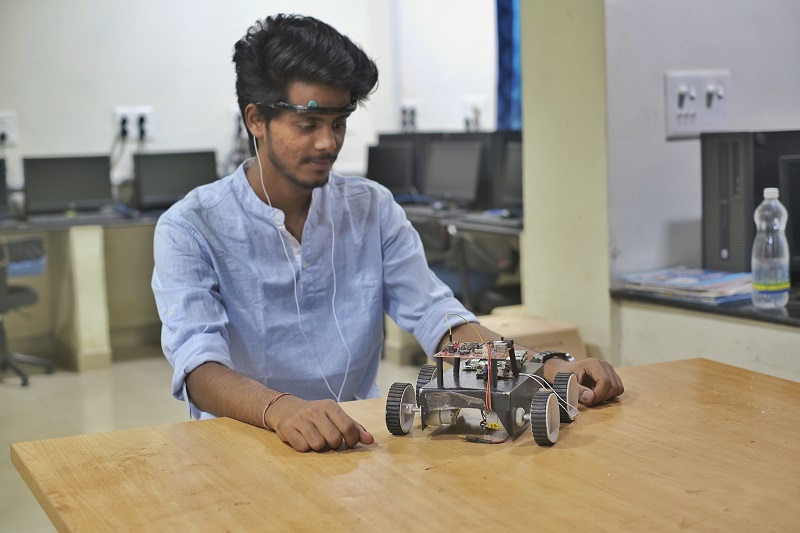

Mind-Controlled Robot

{ Brain-Computer Interface, Signal Processing, Arduino, LabView }

The field of brain-computer interfaces is a driving force for electroencephalography (EEG) technology, which records brain activity from the scalp using electrodes. Its main focus has been on developing applications in a medical context, helping paralyzed or disabled patients interact with the external world. In this regard, a prototype of a brain-controlled robot was built that was controlled in real time by the eye-blink artifact in the EEG signals.

BrainSense is a starting point for developing a vehicle or wheelchair that patients with motor disabilities can control using their minds through Brain-Computer Interfaces. We designed and developed a two-channel EEG acquisition system, with electrodes placed over the prefrontal cortex. Signal processing was performed to reduce noise and unwanted features, first with hardware filters and then using the NI LabVIEW software. A mechanism to ignore involuntary eye blinking was included to give the user better control over the system.

While working on the project, we faced a problem: the system was responding to the user's involuntary eye blinks. As a solution, an algorithm was developed to ignore a single involuntary eye blink, which always occurs simultaneously in both eyes, and a different parameter was set to start the motor.
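One way such a filter could work is to treat isolated blinks as involuntary and only issue a command when two blinks arrive close together. The sketch below illustrates that idea; the double-blink rule, the 0.6 s gap, and the function name `blink_commands` are assumptions for illustration and may differ from the parameter actually used to start the motor.

```python
def blink_commands(blink_times_s, max_gap_s=0.6):
    """Return the times at which a deliberate double blink completes.

    A blink with no partner within `max_gap_s` seconds is treated as
    involuntary and ignored. The rule and the gap are hypothetical.
    """
    commands = []
    i = 0
    while i < len(blink_times_s) - 1:
        gap = blink_times_s[i + 1] - blink_times_s[i]
        if gap <= max_gap_s:
            commands.append(blink_times_s[i + 1])
            i += 2  # both blinks of the pair are consumed
        else:
            i += 1  # isolated blink, skip it
    return commands


# Detected blink times in seconds: two isolated blinks and two double blinks.
blinks = [1.0, 4.2, 4.6, 9.0, 12.0, 12.3]
commands = blink_commands(blinks)
```

Here only the pairs at 4.2/4.6 s and 12.0/12.3 s produce a command, so the robot does not react to the single blinks at 1.0 s and 9.0 s.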

The model won first prize in "The National Science Exhibition-2017".

Fig: System Setup

Fig: Pre-Amplifier Circuit

Date: May 2017 - June 2017

(AIIMS Raipur)

Sleep Monitoring and Manipulation System

{ EEG Signal Processing, Auditory Stimulation}

High-quality sleep is essential for a human being to recover from tiredness and to maintain good health. Sleep deprivation due to sleep-related disorders such as hypertension, atherosclerosis and coronary artery disease may introduce severe physical effects, e.g. cognitive impairments and mental health complications. Many previous studies have found that playing sounds synchronized to the rhythm of slow brain oscillations during sleep enhances these oscillations, thus improving the quality of sleep and boosting memory.

Engineering Sleep is a system that measures cerebral activity to recognize deep sleep by detecting delta waves and delivers synchronized auditory stimulation to enhance the quality of sleep and improve memory.
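Delta-wave detection can be sketched as measuring how much of an EEG window's spectral power falls in the delta band. The example below uses a plain discrete Fourier transform and synthetic signals; the 0.5 to 4 Hz band is the conventional delta range, while the 0.5 decision threshold and the function names are assumptions for illustration, not the system's actual detector.

```python
import cmath
import math


def band_power_fraction(samples, fs_hz, low_hz=0.5, high_hz=4.0):
    """Fraction of non-DC spectral power that lies between low_hz and high_hz."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]
    total = 0.0
    band = 0.0
    for k in range(1, n // 2 + 1):
        freq = k * fs_hz / n
        # Naive DFT coefficient for frequency bin k.
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(centered))
        power = abs(coeff) ** 2
        total += power
        if low_hz <= freq <= high_hz:
            band += power
    return band / total if total > 0 else 0.0


def looks_like_deep_sleep(samples, fs_hz, min_fraction=0.5):
    """Hypothetical rule: flag the window if delta power dominates."""
    return band_power_fraction(samples, fs_hz) >= min_fraction


fs = 64
n = 4 * fs  # a 4-second window
# Synthetic signals: a 2 Hz delta rhythm with a small 12 Hz component,
# and a pure 10 Hz rhythm with no delta content.
delta_like = [math.sin(2 * math.pi * 2 * t / fs)
              + 0.2 * math.sin(2 * math.pi * 12 * t / fs) for t in range(n)]
alpha_like = [math.sin(2 * math.pi * 10 * t / fs) for t in range(n)]
delta_fraction = band_power_fraction(delta_like, fs)
alpha_fraction = band_power_fraction(alpha_like, fs)
```

In a real-time system an FFT library would replace the naive transform, and the auditory stimulus would be scheduled whenever consecutive windows are flagged.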

The system could also track EEG, ECG, and respiration rate, and thus could also be used to detect sleep apnea and track insomnia.

This was my first effort in designing a closed-loop human-machine interaction for expanding human cognitive abilities, and I now wanted to work on the individual aspects of HCI.

Date: August 2018

Plant-Computer Interface

{ Plant-Sensor, RaspberryPi}

This was a fun project inspired by the shouting plant, the Mandrake, in the Harry Potter movies. I thought it would be very cool to be able to give a voice to plants. I had recently found a way to detect electrical impulses travelling through a plant, just like in humans, and decided to use them as a trigger to play a recording. Whenever the Touch-Me-Not plant is touched, the plant transmits the impulse to the RaspberryPi, which plays a preloaded sound via a Bluetooth speaker.

Fig: Electrodes placed on the plant

Date: August 2018 - December 2018

Autonomous Biomimetic Marine Drone

{ Biomimetic Robotics, Arduino, Biomechanics}

Underwater exploration has been an important preoccupation of researchers for a long time. In terms of scientific results, most such exploration missions produce immense amounts of data of critical importance. From the discovery of new life forms in areas we earlier thought were uninhabitable to assessing the impact of climate change on coral reefs or environmental monitoring, underwater exploration has many benefits. For these applications, and as an exercise in mechanical prototyping, a 3D-printed model of a fish was built, inspired by the manoeuvring mechanisms of real fish. The model was designed in Google SketchUp and printed using a 3D printer. The body of SmallMouth is divided into three parts so that each can be controlled separately and the motion of solid segments can reproduce the undulating motion of a real fish. It also had obstacle-avoidance capability, achieved using sensors attached to the front and a microcontroller.

In the long run, with small additions, SmallMouth could replace existing Autonomous Underwater Vehicles for marine exploration missions and could also be used by defence systems for surveillance.

The model won second prize in the IEEE-Skills and Knowledge Enhancement Program-2016.

Fig: Sketchup Model of SmallMouth at different stages

Date: May 2019 - August 2019

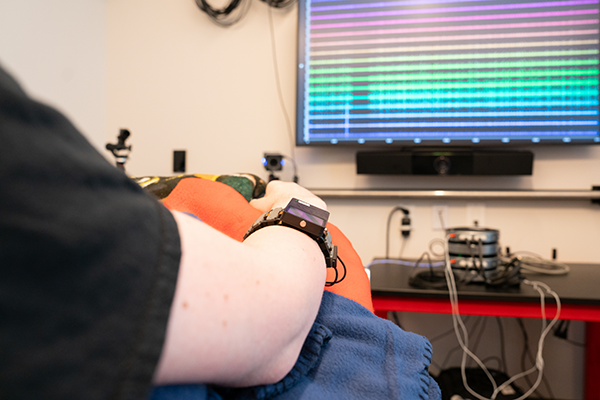

Portable Intracortical Brain-Computer Interface System

Fig: Participant using Portable iBCI system


Date: August 2019 - Present

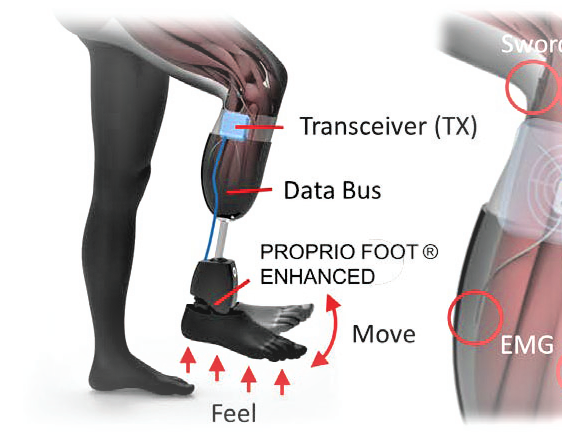

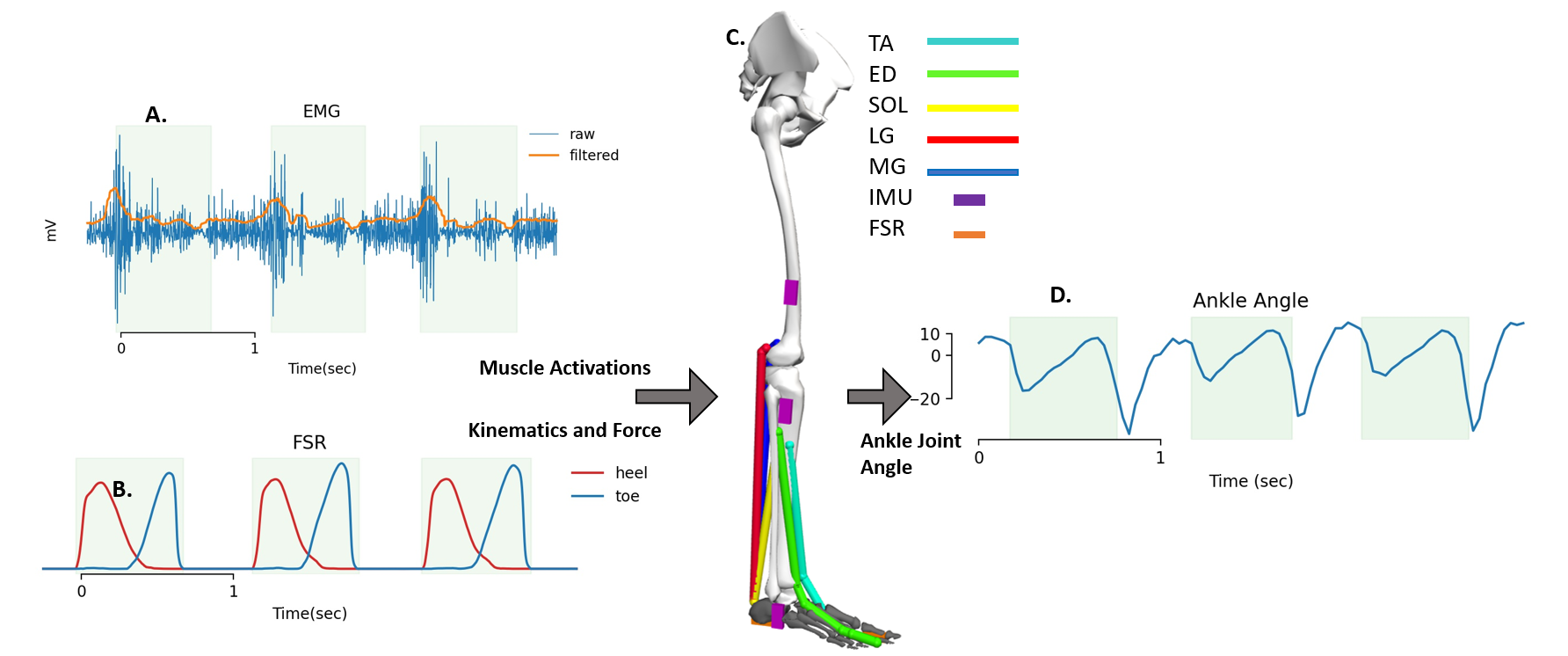

Real-time neuromusculoskeletal model for myoelectric control of ankle prosthesis

Fig: Schematic representation of the musculoskeletal model framework. A) Sample raw and filtered EMG signal. B) Signal from the FSR sensor to detect the swing phase of gait. C) Musculoskeletal model schema with IMU placement and muscles. D) Predicted ankle angle as an output of the model.

Lower-limb amputees account for about 70% of an estimated 185,000 new amputations in the U.S. each year and 1 million worldwide. Current prosthetic systems lack natural feedback and joint control, which, if available, are expected to improve prosthetic use, mobility, and balance and to decrease limb and phantom pain. In this work we aim to address this issue; my contribution is mainly in developing an EMG decoding algorithm to control an ankle-foot prosthesis using muscle activity and IMU signals.

In this study, a real-time neuromusculoskeletal (NMS) model of the lower limb with two degrees of freedom, at the knee and ankle joints, was developed to receive EMG and limb kinematic signals as input and predict ankle motion as output during target-following tasks and the swing phase of gait. Our results indicated that the real-time NMS model accurately predicts ankle motion for various target speeds and positions. We also evaluated the model's performance in predicting ankle kinematics during the swing phase and found a mean peak correlation of 0.95 between predicted and measured motions across all subjects. The proposed modeling framework can be used for developing powered ankle prostheses for transtibial amputees.
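A peak correlation between two ankle-angle traces can be computed by sliding one trace against the other and keeping the best Pearson correlation. The sketch below shows one such calculation on synthetic data; the lag range, the function name `peak_cross_correlation`, and the sine-wave traces are assumptions for illustration and are not the study's analysis code.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((p - mx) * (q - my) for p, q in zip(x, y))
    sxx = sum((p - mx) ** 2 for p in x)
    syy = sum((q - my) ** 2 for q in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def peak_cross_correlation(measured, predicted, max_lag):
    """Return (best_r, best_lag) over lags in [-max_lag, max_lag] samples.

    A positive lag means `predicted` trails `measured` by that many samples.
    """
    if len(measured) != len(predicted):
        raise ValueError("traces must have the same length")
    best_r, best_lag = -1.0, 0
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            x, y = measured[:len(measured) - lag], predicted[lag:]
        else:
            x, y = measured[-lag:], predicted[:len(predicted) + lag]
        r = pearson(x, y)
        if r > best_r:
            best_r, best_lag = r, lag
    return best_r, best_lag


# Synthetic traces: the "predicted" angle is the "measured" one delayed by 3 samples.
measured = [math.sin(2 * math.pi * t / 50) for t in range(200)]
predicted = [math.sin(2 * math.pi * (t - 3) / 50) for t in range(200)]
best_r, best_lag = peak_cross_correlation(measured, predicted, max_lag=10)
```

Averaging `best_r` over trials and subjects would give a summary figure comparable in form to a mean peak correlation.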

Fig: (Left) Testing the EMG-driven musculoskeletal model on healthy individuals. (Right) Validating the model on a participant with lower limb amputation

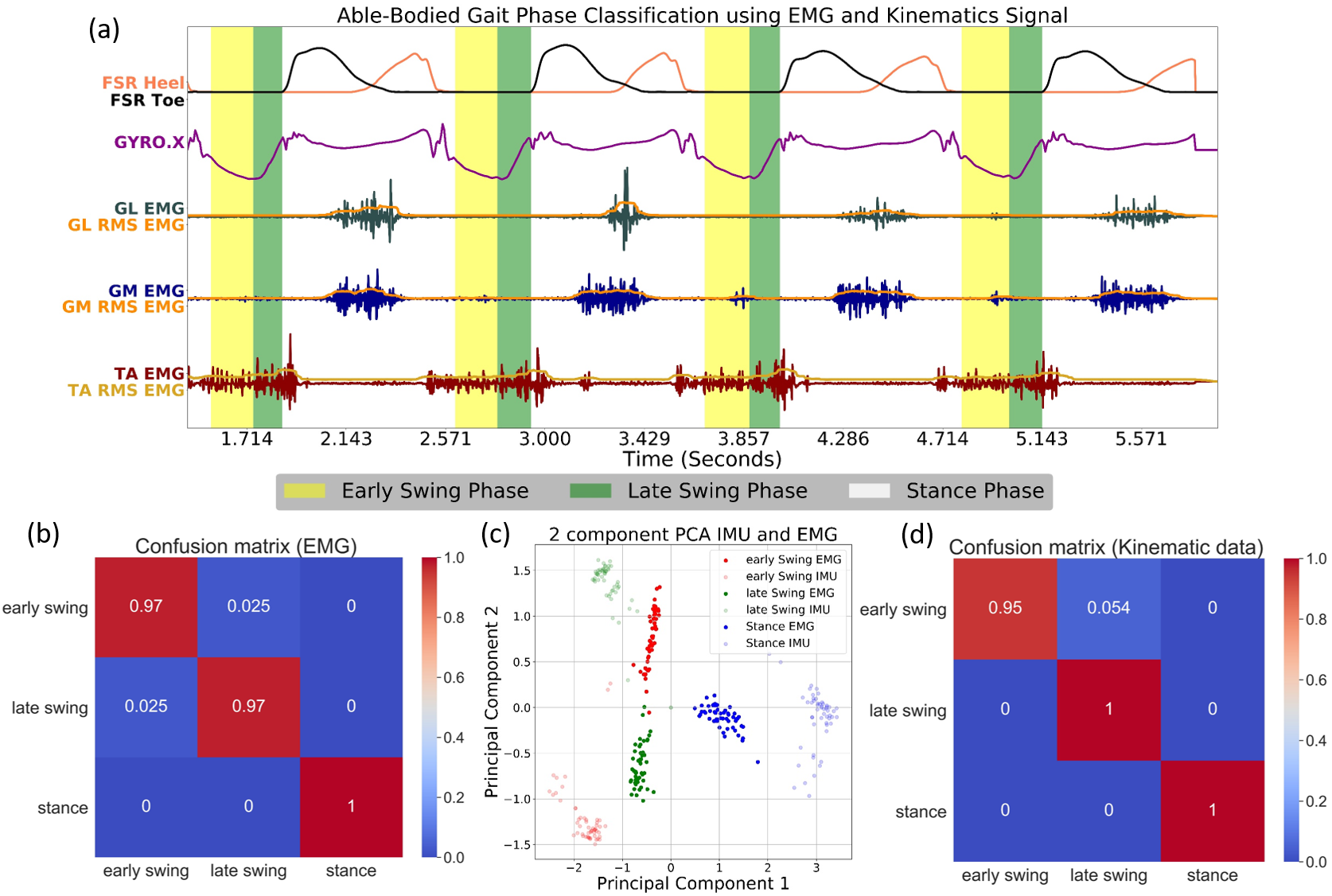

Classifying Gait phases using IMU and EMG signal

Early Swing and Stance phases were identified by determining the heel-strike and toe-off timestamps using force sensors placed on the heel and great toe. The transition between the Early and Late Swing phases was identified by the rotation of the shank about the talocrural joint axis, using the X-axis of the gyroscope placed on the Achilles tendon. These gait phase identification parameters were used to label the data for supervised classification. The features used from the kinematic data were the mean of the 20 largest sample values and the mean of the 20 smallest sample values. The classification model was a Random Forest, with 25% of the total data used as training data and the other 75% as testing data. Classification based on kinematic data alone yielded a total classification accuracy of 97%. The EMG features used for classification were the mean RMS value in each phase, the maximum RMS value in each phase, the mean of the larger half of the RMS EMG sample values, and the mean of the smaller half of the RMS EMG sample values. Classification using the Random Forest classifier yielded 97% accuracy when 30% of the total data was used as training data and the other 70% as testing data. For each of the kinematic and EMG datasets, dimensionality reduction was performed by finding two principal components.
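The labelling and feature steps described above can be sketched in a few lines. This is a minimal illustration on toy data: the force threshold, the helper names (`gait_events`, `extreme_means`, `rms`), and the sample values are assumptions, and the Random Forest and PCA stages are omitted.

```python
import math


def gait_events(heel_fsr, toe_fsr, threshold):
    """Find heel-strike and toe-off sample indices from two force sensors.

    Heel strike: heel force rises to or above `threshold`.
    Toe-off: toe force falls below `threshold`.
    The threshold is a hypothetical value chosen per sensor.
    """
    heel_strikes = [i for i in range(1, len(heel_fsr))
                    if heel_fsr[i - 1] < threshold <= heel_fsr[i]]
    toe_offs = [i for i in range(1, len(toe_fsr))
                if toe_fsr[i - 1] >= threshold > toe_fsr[i]]
    return heel_strikes, toe_offs


def extreme_means(window, k=20):
    """Mean of the k largest and mean of the k smallest samples in a window."""
    ordered = sorted(window)
    k = min(k, len(ordered))
    return sum(ordered[-k:]) / k, sum(ordered[:k]) / k


def rms(window):
    """Root mean square of a window of EMG samples."""
    return math.sqrt(sum(v * v for v in window) / len(window))


# Toy force traces: heel loads at index 2, toe unloads at index 6.
heel = [0, 0, 5, 5, 5, 0, 0]
toe = [0, 0, 0, 5, 5, 5, 0]
heel_strikes, toe_offs = gait_events(heel, toe, threshold=2)

# Toy gyroscope window with values 0..99.
top_mean, bottom_mean = extreme_means(list(range(100)), k=20)
```

Each labelled gait phase would contribute one feature row (for example `top_mean`, `bottom_mean`, and RMS values), which can then be passed to a classifier.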

Fig: Able-Bodied Gait Phase classification. (a) Time series of muscle activations and Talocrural joint axis gyroscope signal with identified gait phases marked. (b) Confusion matrix for classification of gait phases using only kinematics data. (c) Comparison of the two normalized principal components of kinematics data and EMG data. (d) Confusion matrix for classification of gait phases using only EMG data.

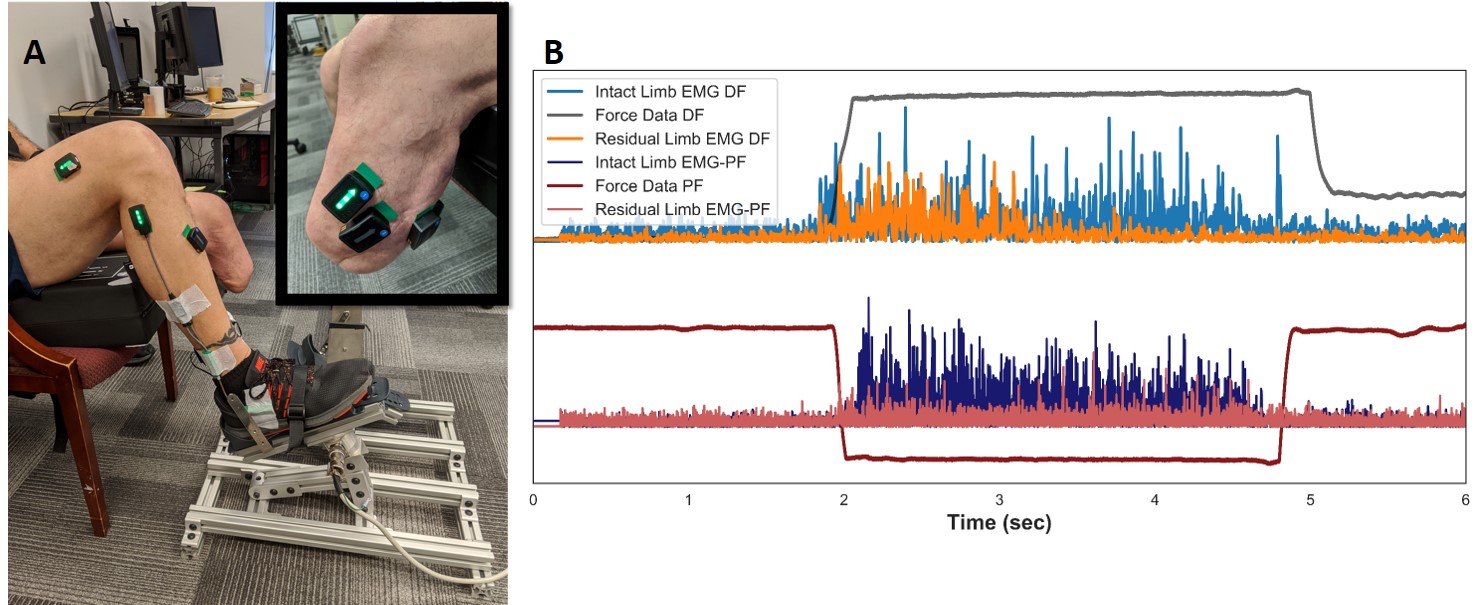

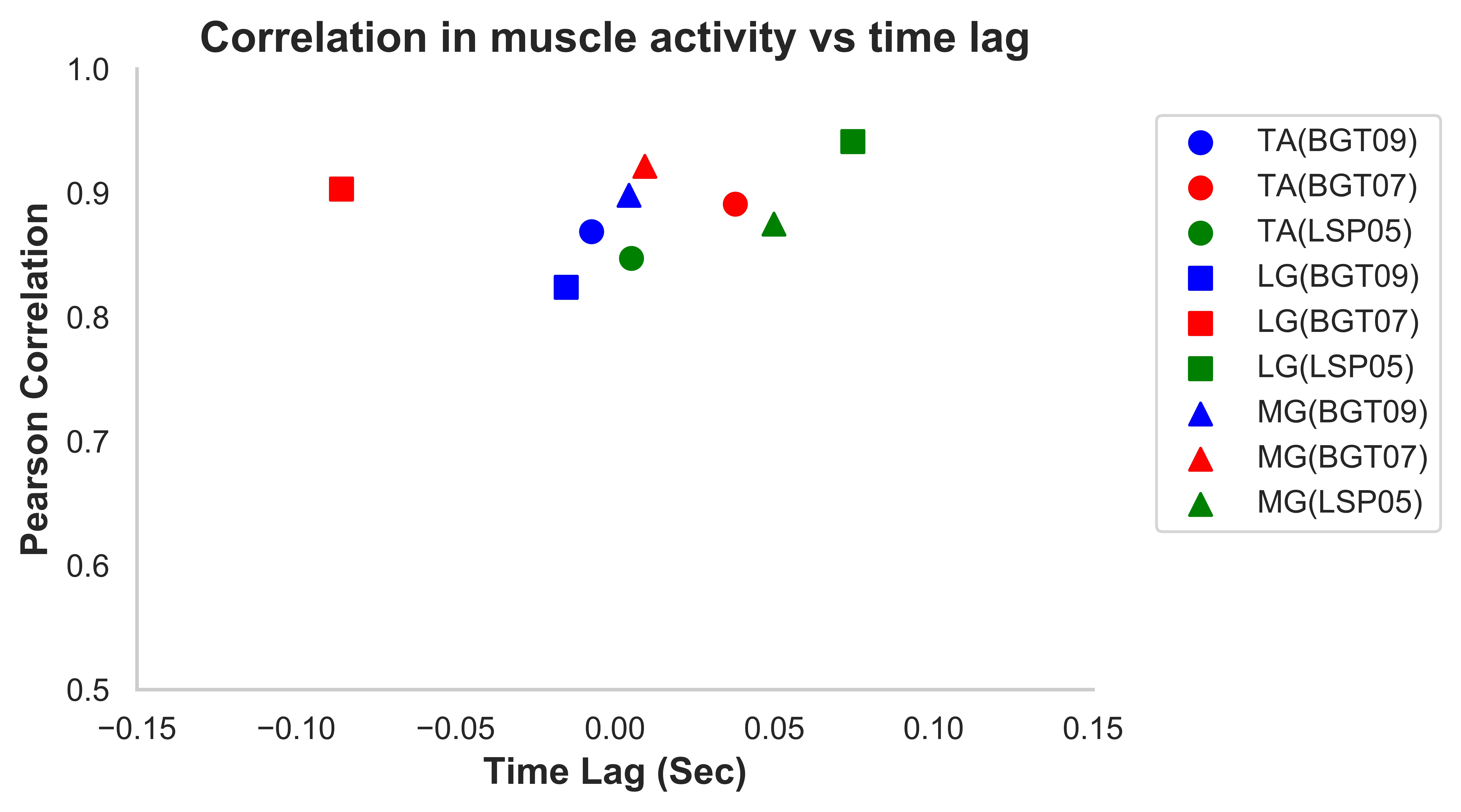

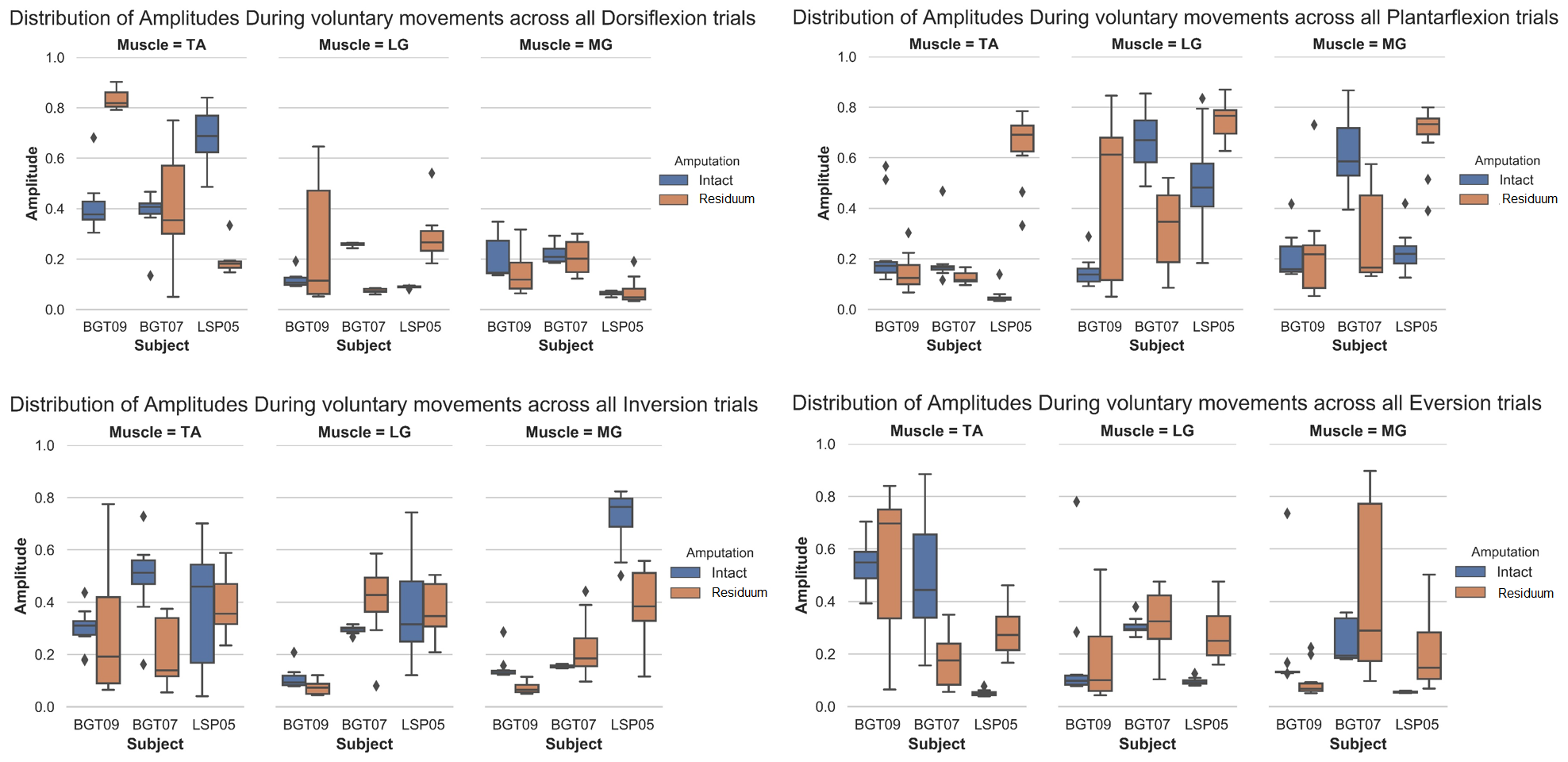

In the next study, we examined the differences in muscle recruitment between the intact and residual limbs of three transtibial amputees with the aim of characterizing voluntary recruitment patterns. The study's objective is to characterize patterns of residual muscle recruitment by assessing the spatial and temporal co-recruitment of muscles on the residual limbs of transtibial amputees while they perform volitional isometric movements (dorsiflexion, plantarflexion, eversion, and inversion). We aim to analyze the pattern of recruitment of residual muscles in comparison with the intact limbs. We report that, while there is variability across subjects, there are consistencies in the muscle recruitment patterns for the same functional movement between the intact and the residual limb within each individual subject. These results provide insights into how symmetric activation in residual muscles can be characterized and used for better control of myoelectric prosthetic devices in transtibial amputees.

Fig: Experimental setup with Ankle Torque Rig and EMG Placements. A) Subjects were aligned with their knee at 90 degrees to isometrically move their intact ankle. EMG sensors were placed over the major muscles on both limbs, with a goniometer at the intact ankle. B) Example traces of processed EMG data from the primary actors of the intact and residual limb during Dorsiflexion (DF) and Plantarflexion (PF).

Figure 2: Temporal alignment and EMG amplitude correlations between the 2 limbs. Mean-max correlations of the Intact vs Residual limb muscles across all movements for each subject are shown against the time-lag at the time of maximum correlation. Each symbol represents a muscle type and each color represents a subject.

Figure 3: Comparison of Recruitment by Muscle and Activity. Distribution of the normalized RMS signal during the hold period. Panels A, B, C, and D correspond to Dorsiflexion, Plantarflexion, Inversion, and Eversion, respectively. Each diamond marks an outlier.